Many consider speech recognition to be the next ‘killer app’ for mobile. Apple famously (or infamously, depending on how much you’ve used it) added Siri in iOS 5 and Android responded with Google Now. So how do you add speech recognition to an enterprise mobile application built on the Salesforce Touch Platform? I thought you’d never ask!

We recently hosted a webinar introducing the AT&T Toolkit for Salesforce Platform, and I demoed a simple mobile application built using Visualforce and the Salesforce Mobile SDK that uses the AT&T toolkit to search for Case records in Salesforce based on a user’s voice input. You can jump to this point in the webinar recording to watch a demo of the application. The full code base for that application is also available on GitHub. Let’s dissect the application architecture and code.

Application Architecture

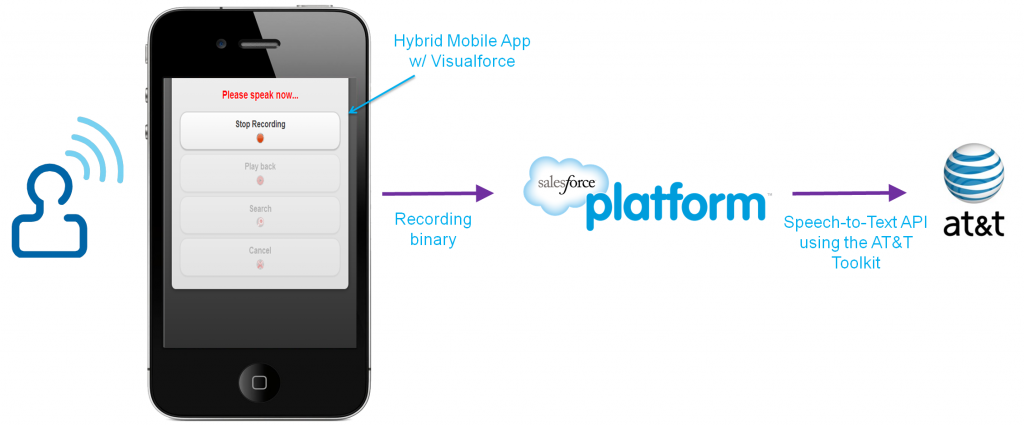

The figure below describes the high-level architecture for the application.

The app is built using Visualforce and jQuery Mobile and displays all Cases assigned to the currently logged-in user. I then used the Salesforce Hybrid Mobile SDK to create a hybrid version of the app to install on an iPhone or Android device. When the user taps the voice search button, the app starts capturing the microphone input from the device. The binary recording is then sent to the Apex controller for the page. In the controller we use the AT&T Toolkit to invoke the AT&T Speech-to-Text API. AT&T converts the voice input into text and returns the result to the controller. Lastly, we run a SOQL query based on the recognized text and return any matching Case records to the mobile app, where they are displayed to the user.

What is the AT&T Toolkit for Salesforce?

AT&T has an extensive library of public APIs that developers can use to build enterprise apps and solutions. Developers can now access those APIs natively from the Force.com platform with the AT&T Toolkit for Salesforce Platform. The toolkit provides strongly-typed Apex wrappers for RESTful AT&T APIs like speech-to-text, SMS, location, payment and more.

Will this Speech-to-text App only work for AT&T subscribers?

The short answer – no. Here’s the longer version. As mentioned earlier, the application uses the AT&T Toolkit to perform the speech-to-text conversion. However, the AT&T Speech-to-text API is carrier agnostic. An app does NOT have to run on an AT&T device in order to invoke the API. In that sense, the AT&T Speech-to-text API is no different from, say, the Nuance API and can be invoked from any mobile device, no matter the underlying OS (Android, iOS etc.) or carrier.

It is also important to note that this carrier agnostic nature is not true of all the AT&T APIs supported by the toolkit. The SMS and Location APIs for example only work with AT&T devices.

Developing the app

Let’s now review the key components of the app and the step-by-step process of creating it.

Installing the AT&T toolkit

The first step is to install and configure the AT&T Toolkit in your Developer Edition (DE) or Sandbox org. Since the toolkit is available as an unmanaged package, this step should take no more than a few minutes. Next, you have to create a free AT&T Developer account and configure a couple of things on the AT&T and Salesforce sides.

Building the Visualforce app

The next step is building the Visualforce page that forms the heart of the application. In addition to the voice search feature, the page provides a simple list-detail view of all Cases that are assigned to the logged-in user. The CaseDemo.page is HTML5 compliant and uses jQuery Mobile to provide the general look-and-feel and navigation for the application. For a more detailed look at building a mobile-friendly, list-detail HTML5 web view using Visualforce, jQuery Mobile and JavaScript Remoting, check out my blog series on the Cloud Hunter mobile application.

Building a Hybrid mobile app

At this point we have a pure web application that can be rendered in the mobile browser of any smartphone. This is great since we were able to leverage our existing web development skills (i.e. HTML, JavaScript and CSS) while also being cross-platform. However, we need to capture the microphone input from the mobile device in order to implement our voice search requirement. This is currently not possible with a 100% web application; you need either a native or hybrid app in order to access device features like the microphone and camera. Hybrid apps put a thin native ‘wrapper’ around a web application, giving you all the benefits of mobile web development combined with access to device features like the microphone. We’ll next create a hybrid mobile application from the CaseDemo Visualforce page using the Salesforce Mobile SDK.

This article walks you through the steps for creating an iOS hybrid app from a Visualforce page using the Mobile SDK. During the webinar I demoed a hybrid iOS version of the application built that way. However, one of the advantages of hybrid mobile development is that you can also create an Android version of the app from the same Visualforce page. You can refer to this blog post for how to create an Android hybrid application using the Mobile SDK.

Recording audio using PhoneGap/Cordova

With our hybrid application in place, we can now record the user’s voice input. The critical piece of technology that enables device access in a hybrid application is an open source project called Apache Cordova (also known as PhoneGap). Cordova/PhoneGap exposes device functions like the camera and microphone as JavaScript functions. The Salesforce Mobile SDK bundles Cordova (v2.3.0 as of Mobile SDK v1.5), so all you have to do is import the Cordova JavaScript library in your Visualforce page. This post describes a way of using Dynamic Visualforce Components to include the correct version of the Cordova JS file depending on whether the page is being accessed from an Android or iOS device.
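The referenced post makes this choice on the server with Dynamic Visualforce Components. Purely as an illustration of the same decision, here is a small client-side sketch that maps a user agent string to a Cordova script name. The file names and both function names are hypothetical; the real names depend on how the Cordova builds were uploaded to your org.

```javascript
// Hypothetical static resource names for the two platform-specific builds.
var CORDOVA_SCRIPTS = {
  ios: 'cordova-2.3.0-ios.js',
  android: 'cordova-2.3.0-android.js'
};

// Returns 'ios', 'android', or null for an unrecognized user agent.
function detectPlatform(userAgent) {
  if (/iPhone|iPad|iPod/i.test(userAgent)) {
    return 'ios';
  }
  if (/Android/i.test(userAgent)) {
    return 'android';
  }
  return null;
}

// Returns the script to load, or null when no Cordova build applies
// (for example, when the page is opened in a desktop browser).
function cordovaScriptFor(userAgent) {
  var platform = detectPlatform(userAgent);
  return platform ? CORDOVA_SCRIPTS[platform] : null;
}
```

On a device you would call `cordovaScriptFor(navigator.userAgent)`. Doing the selection server-side, as the referenced post does, avoids shipping this logic to the client at all.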

Now that we’ve included the Cordova JS library, let’s see how the application captures the microphone input. The snippet below from the CaseDemo.page shows how we use the Cordova Media API to record the user’s voice input.
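As a minimal sketch of this step (not the CaseDemo.page listing itself), the function below creates a Cordova `Media` object and starts recording. The file names, `recordingFileName` and `startVoiceRecording` are assumptions for illustration, and the `Media` constructor is passed in as a parameter so the function can be exercised without a device.

```javascript
// Pick a file name whose extension matches the format each platform records:
// AMR on Android, WAV otherwise (iOS). The base name is hypothetical.
function recordingFileName(platform) {
  return platform === 'Android' ? 'voicesearch.amr' : 'voicesearch.wav';
}

// MediaCtor is Cordova's global Media constructor on a real device.
// onError receives a MediaError if recording fails.
function startVoiceRecording(MediaCtor, platform, onError) {
  var recorder = new MediaCtor(
    recordingFileName(platform),
    function () { /* fires when the current record/stop action completes */ },
    onError
  );
  recorder.startRecord();
  return recorder; // keep the reference; stopRecord() is called on it later
}
```

In the hybrid app this would be called as `startVoiceRecording(Media, device.platform, onError)` once Cordova's `deviceready` event has fired. Depending on the Cordova version, iOS may also require the target .wav file to exist before recording starts, so check the Media documentation for the version you bundle.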

A simple call to the startRecord function of the Cordova Media object (line 17) starts recording the voice input. Once the user is done speaking, they press the ‘Stop Recording’ button on the page and the following JS function is invoked.
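A rough sketch of what such a stop handler might look like: stop the recorder, read the saved file as a data URL, and keep only the Base64 payload. `readAsDataUrl` is a hypothetical helper that would wrap the Cordova File API (resolve the file, then call `FileReader.readAsDataURL`), and the other function names are likewise assumptions.

```javascript
// Strip the "data:<mime>;base64," prefix from a data URL and return the payload.
function base64FromDataUrl(dataUrl) {
  var marker = ';base64,';
  var index = dataUrl.indexOf(marker);
  if (index === -1) {
    throw new Error('Not a Base64 data URL');
  }
  return dataUrl.substring(index + marker.length);
}

// Stop the recording, then hand the Base64-encoded audio to onEncoded.
// readAsDataUrl(fileName, callback) is a hypothetical helper, see above.
function stopAndEncode(recorder, fileName, readAsDataUrl, onEncoded) {
  recorder.stopRecord();
  readAsDataUrl(fileName, function (dataUrl) {
    onEncoded(base64FromDataUrl(dataUrl));
  });
}
```

Keeping the prefix-stripping in its own function makes it easy to check in isolation, since the controller expects the raw Base64 string rather than a full data URL.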

After invoking the stopRecord() function, the recording is saved as a binary file on the mobile device (WAV format in the case of iOS and AMR format in the case of Android). We then convert the binary recording into a Base64 encoded string on line 6. At this point the user can play back the recording to review and confirm it. Here is the JS function that gets invoked when the user taps the ‘Play back’ button on the page.
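Playback can be sketched along these lines, assuming the recording was saved under a known file name. `playRecording` is a hypothetical name, and the `Media` constructor is again passed in so the function can run outside a device.

```javascript
// Play a saved recording and release the native audio resources
// once playback has finished.
function playRecording(MediaCtor, fileName, onError) {
  var player = new MediaCtor(
    fileName,
    function () { player.release(); }, // success: the play action completed
    onError
  );
  player.play();
  return player;
}
```

Calling `release()` when playback completes matters most on Android, where the number of native media player instances is limited.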

Speech-to-text using the AT&T toolkit

Finally, let’s review what happens when the user taps the ‘Search’ button to perform a search for matching Case records in Salesforce.
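As a hedged sketch of that search call, the function below passes the Base64 recording to the `searchCases` method of `CaseDemoController` through JavaScript Remoting. The parameter names and the two callbacks are assumptions, and the remoting manager is injected so the logic can be tested off-platform.

```javascript
// remotingManager is Visualforce.remoting.Manager on a real Visualforce page.
// onCases receives the matching Case records; onError receives a message.
function voiceSearch(remotingManager, base64Audio, onCases, onError) {
  remotingManager.invokeAction(
    'CaseDemoController.searchCases',
    base64Audio,
    function (result, event) {
      if (event.status) {
        onCases(result);          // remote call succeeded
      } else {
        onError(event.message);   // Apex exception or transport failure
      }
    },
    { escape: true }
  );
}
```

In a Visualforce page the action name is usually written as `'{!$RemoteAction.CaseDemoController.searchCases}'` so that any namespace prefix is resolved for you.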

The voiceSearch() JS function sends the Base64 encoded version of the voice recording to the ‘searchCases’ Apex controller method via a JavaScript Remoting call. Let’s look at that method of the CaseDemoController class next.

Line 9 shows the first use of the AT&T toolkit. As mentioned earlier, the toolkit provides wrapper Apex classes for invoking AT&T APIs like Speech-to-text, SMS and more. Developers don’t have to worry about the underlying plumbing of creating and parsing JSON messages, invoking the AT&T RESTful APIs, handling authentication etc. – the toolkit abstracts all that away. The AttSpeech class, for example, is the wrapper class for invoking the AT&T Speech-to-Text API. Lines 12-14 set the various inputs required to invoke the API, not least of which is the binary recording received from the mobile device. We then call the convert() method of the AttSpeech class (line 16) to invoke the API, and the recognized text is returned as an AttSpeechResult object. Finally, we perform a simple SOQL query to find any Case records whose parent Account name matches the recognized text and return the result to the Visualforce page for display to the user.

Hopefully this blog post has gotten your creative juices flowing about what’s possible when building mobile apps on the Salesforce Touch Platform and then enhancing them with AT&T mobility services like Speech-to-Text. Happy coding.